Understanding Support Vector Machine Kernels can be challenging, especially if you're just starting out with data science in general. But never fear! This article will provide you with an introduction to SVM Kernels especially polynomial kernels, as well as walk you through how to use them in Python from scratch using Pandas, and NumPy. So let's get started

What are Kernels in SVM

SVM is an algorithm that has shown great success in the field of classification. It separates the data into different categories by finding the best hyperplane and maximizing the distance between points. To this end, a kernel function will be introduced to demonstrate how it works with support vector machines. Kernel functions are a very powerful tool for exploring high-dimensional spaces. They allow us to do linear discriminants on nonlinear manifolds, which can lead to higher accuracies and robustness than traditional linear models alone.

The kernel function is just a mathematical function that converts a low-dimensional input space into a higher-dimensional space. This is done by mapping the data into a new feature space. In this space, the data will be linearly separable. This means that a support vector machine can be used to find a hyperplane that separates the data.

For example, if the input 𝑥 is two-dimensional, the kernel function will map it into a three-dimensional space. In this space, the data will be linearly separable.

In addition, they provide more features than those of other algorithms such as neural networks or tree ensembles in some kinds of problems involving handwritten recognition, face detection, etc because they extract intrinsic properties of data points through a kernel function.

Why Kernels?

Kernels are useful because they can be used to separate data that is not linearly separable. When there is a case where the data cannot be separated using a basic SVM algorithm, we can use the kernel trick, which allows more accurate results since the data is being converted to a higher dimension which leads to a new extra dimension for the data to be spread.

Kernels are also useful because they can be used to decrease the errors of the SVM algorithm. The reason for this is that the kernel function can map the data into a higher-dimensional space. In this space, the data will be more linearly separable. This means that the SVM algorithm will be able to find a hyperplane that separates the data with higher accuracy and lower errors.

There are many different kernel functions that can be used. Some of the most common kernel functions are the polynomial kernel, the RBF kernel, and the sigmoid kernel.

The Polynomial Kernel

A polynomial kernel is a kind of SVM kernel that uses a polynomial function to map the data into a higher-dimensional space. It does this by taking the dot product of the data points in the original space and the polynomial function in the new space.

In a polynomial kernel for SVM, the data is mapped into a higher-dimensional space using a polynomial function. The dot product of the data points in the original space and the polynomial function in the new space is then taken. The polynomial kernel is often used in SVM classification problems where the data is not linearly separable. By mapping the data into a higher-dimensional space, the polynomial kernel can sometimes find a hyperplane that separates the classes.

The polynomial kernel has a number of parameters that can be tuned to improve its performance, including the degree of the polynomial and the coefficient of the polynomial.

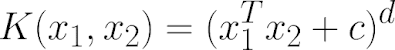

For degree d polynomials, the polynomial kernel is defined as:

where c is a constant and x1 and x2 are vectors in the original space.

The parameter c can be used to control the trade-off between the fit of the training data and the size of the margin. A large c value will give a low training error but may result in overfitting. A small c value will give a high training error but may result in underfitting. The degree d of the polynomial can be used to control the complexity of the model. A high degree d will result in a more complex model that may overfit the data, while a low degree d will result in a simpler model that may underfit the data.

When a dataset is given containing features x1 and x2, the equation can be transformed as:

The important terms we need to note are x1, x2, x1^2, x2^2, and x1 * x2. When finding these new terms, the non-linear dataset is converted to another dimension that has features x1^2, x2^2, and x1 * x2.

Implementing Polynomial Kernel with SVM in Python

Creating the dataset

Alright, now let's do the practical implementation of the polynomial kernel in python. For this demo, we need a random dataset. So let's create a non-linearly separable dataset using sklearn

import numpy as npimport matplotlib.pyplot as pltimport pandas as pdfrom sklearn.datasets import make_circlesX, y = make_circles(n_samples=500, noise=0.13, random_state=42)df = pd.DataFrame(dict(x1=X[:, 0], x2=X[:, 1], y=y))

Now let's plot the dataset

colors = {0:'red', 1:'blue'}fig, ax = plt.subplots()grouped = df.groupby('y')for key, group in grouped:group.plot(ax=ax, kind='scatter', x='x1', y='x2', label=key, color = colors[key])plt.show()

This dataset is not linearly separable since the two classes are intermixed. Here the basic linear SVM will not be able to classify this dataset with high accuracy. So we need to transform this 2D dataset into 3 dimensions.

The SVM Algorithm

In the previous article, we implemented the SVM algorithm from scratch in python, here is the link to the article: Implementing Support Vector Machine Algorithm from Scratch in Python

Now we are going to modify this algorithm for supporting the polynomial kernel function

import numpy as npclass SVM:def __init__(self, C = 1.0):# C = error termself.C = Cself.w = 0self.b = 0# Hinge Loss Function / Calculationdef hingeloss(self, w, b, x, y):# Regularizer termreg = 0.5 * (w * w)for i in range(x.shape[0]):# Optimization termopt_term = y[i] * ((np.dot(w, x[i])) + b)# calculating lossloss = reg + self.C * max(0, 1-opt_term)return loss[0][0]def fit(self, X, Y, batch_size=100, learning_rate=0.001, epochs=1000):# The number of features in Xnumber_of_features = X.shape[1]# The number of Samples in Xnumber_of_samples = X.shape[0]c = self.C# Creating ids from 0 to number_of_samples - 1ids = np.arange(number_of_samples)# Shuffling the samples randomlynp.random.shuffle(ids)# creating an array of zerosw = np.zeros((1, number_of_features))b = 0losses = []# Gradient Descent logicfor i in range(epochs):# Calculating the Hinge Lossl = self.hingeloss(w, b, X, Y)# Appending all losseslosses.append(l)# Starting from 0 to the number of samples with batch_size as intervalfor batch_initial in range(0, number_of_samples, batch_size):gradw = 0gradb = 0for j in range(batch_initial, batch_initial+ batch_size):if j < number_of_samples:x = ids[j]ti = Y[x] * (np.dot(w, X[x].T) + b)if ti > 1:gradw += 0gradb += 0else:# Calculating the gradients#w.r.t wgradw += c * Y[x] * X[x]# w.r.t bgradb += c * Y[x]# Updating weights and biasw = w - learning_rate * w + learning_rate * gradwb = b + learning_rate * gradbself.w = wself.b = breturn self.w, self.b, lossesdef predict(self, X):prediction = np.dot(X, self.w[0]) + self.b # w.x + breturn np.sign(prediction)

Modifying SVM for polynomial features

To bring polynomial features to our SVM algorithm we need to add two things, a new parameter kernel to specify which type of kernel to use and the method that transforms the dataset from a lower dimension to a higher dimension.

# svm.pyclass SVM:def __init__(self, C = 1.0, kernel=None):# C = error termself.C = Cself.w = 0self.b = 0

The new method transform_poly will look like this:

def transform_poly(self, X, Y=None):# Finding the Square of X1, X2X['x1^2'] = X['x1'] ** 2X['x2^2'] = X['x2'] ** 2# Finding the product of X1 and X2X['x1 * x2'] = X['x1'] * X['x2']# Converting dataset to numpy arrayX = X.to_numpy()if Y.size != 0:Y = Y.to_numpy()return X, Yelse:return X

Here we are finding the square and product of features x1 and x2 as we discussed in the equation above

and then converting the panda's data frame to a NumPy array. Finally, the resulting value of X and Y is returned. If there are no Y values only X is returned.

Inside the fit method, we can add the transform_poly method just like this:

def fit(self, X, Y, batch_size = 100, learning_rate = 0.001, epochs = 1000):if(self.kernel == "poly"):print("SVM(kernel='poly')")X, Y = self.transform_poly(X, Y)else:X = X.to_numpy()Y = Y.to_numpy()............

So if we specified SVM with the kernel poly, the data X and Y is transformed from lower dimension to higher dimension

Inside the predict method, we can do the same:

def predict(self, X):if(self.kernel == "poly"):X = self.transform_poly(X, np.array([]))else:X.to_numpy()linear_prediction = np.dot(X, self.w[0]) + self.breturn np.sign(linear_prediction)

That's it! our SVM algorithm is good to go for doing classification.

Performing Classification

# creating X and y valuesX = df[['x1', 'x2']]y = df['y']# Replacing 0 with -1 for the SVM model to recognize labelsy = y.replace(0, -1)

Splitting the dataset

from sklearn.model_selection import train_test_splitX_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.7, random_state=42)

Training and testing the SVM model with linear kernel

# Self Coded SVM algorithmfrom sklearn.metrics import accuracy_scorefrom svm2 import SVMsvm = SVM()w, b, losses = svm.fit(X_train, y_train)pred = svm.predict(X_test)accuracy_score("Accuracy:",pred, y_test)----Accuracy: 0.48

As you can see that the accuracy is below 0.5 when trying to do a linear fit, the accuracy is pretty low in this case, so let's go ahead and train the SVM with a polynomial kernel.

Training and testing the SVM model with polynomial kernel

# Self Coded SVM algorithmfrom sklearn.metrics import accuracy_scorefrom svm2 import SVMsvm = SVM(kernel="poly")w, b, losses = svm.fit(X_train, y_train)pred = svm.predict(X_test)accuracy_score("Accuracy:",pred, y_test)---Accuracy: 0.7657142857142857

That's nice! the accuracy of the model increased from 0.48 to 0.76 when doing a polynomial fit instead of a linear fit.

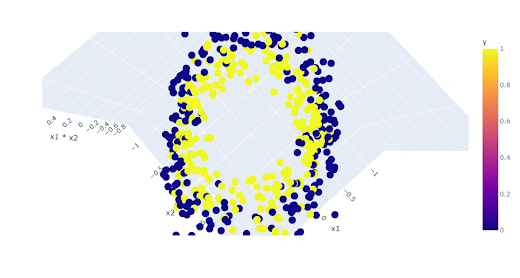

Visualization

Before transforming the dataset:

import plotly.express as pxdf['x1^2'] = df['x1'] ** 2df['x2^2'] = df['x2'] ** 2df['x1 * x2'] = df['x1'] * df['x2']fig = px.scatter_3d(df, x='x1', y='x2', z='x1 * x2', color='y')fig.show()

After transforming the dataset

fig = px.scatter_3d(df, x='x1^2', y='x2^2', z='x1 * x2', color='y')fig.show()

Articles to Read:

.png)