Introduction

Support vector machines are among the most powerful and practical machine learning algorithms ever designed. They can be used to predict outcomes of marketing campaigns, predict user behavior on websites, accurately predict results in medical tests, and much more. In this article, we’re going to focus on the concept of a primal support vector machine (SVM) in detail including its math and crazy derivations. So let's get into it.

What is a Primal Support Vector Machine?

The thing you need to understand is that SVM is so powerful that it can even be used in more than 2-dimensions. In some cases, the data points encountered in a problem can be linearly separable or non-linearly separable,

If the data points of classes are linearly separable, we can simply formulate the optimization function using the basic SVM which is known as the Primal formulation of SVM. But when the data points are not linearly separable the Primal formulation simply doesn't work, Here we need to use something known as the Dual Form of SVM that deals with multiple dimensions. But in this article, we are focusing on the basic concept of SVM, ie, the Primal formulation of SVM.

Some important SVM terms

.png) |

| SVM Diagram |

Hyperplane

A hyperplane is a decision boundary that can be used to classify data. In the support vector machine algorithm, a hyperplane is created by finding a line that best separates the data. When the decision boundary is more than 2-dimensional, it is called a hyperplane.

Support Vectors

The support vectors in a support vector machine algorithm are the data points that lie closest to the separating line between the two classes. These vectors are used to calculate the optimal decision boundary for a classifier. When performing classification using the Support Vector Machine algorithm, the algorithm finds the hyperplane that best separates the two classes of data, and the support vectors are the points on the hyperplane that is closest to the points in the training data.

Marginal Distance

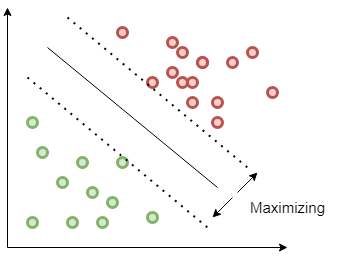

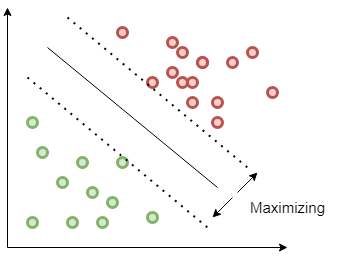

The marginal distance in the Support Vector Machine algorithm is the distance between the closest point in the training data set and the decision boundary. Increasing the margin distance can lead to improved generalization performance, ie, better out-of-sample prediction.

What is the goal of SVM?

Alright, we had a quick overview on the Primal form of SVM and some important terms regarding SVM, but what is the actual goal of SVM? The main goal of SVM is the maximization of margins between two different classes. That means that you want to make sure that as many points in one class are on one side of the decision boundary and as many points in the other class are on the other side.

When this happens, all points with a higher degree of separation will be correctly classified while all points with a lower degree of separation will be misclassified.

So when the marginal distance between these two classes is at their maximum, they become the optimal solution for maximizing margin and minimizing risk. As such, it becomes a lot easier to classify new points without any error because they can just be placed on either side of the decision boundary based on which class it belongs to. If there are errors though then there's always something called regularization which allows us to penalize models so that they generalize better for new data points.

Separating Hyperplane

A Hyperplane as we said is a line that separates the data points into two sets. The points that are on one side of the line will be in one set, and the points that are on the other side of the line will be in another set. But how can we find the equation of the hyperplane? As we know in linear and logistic regression the equation for the best fit line will be y = mx + b, where m is the slope, and b is the intercept. But in SVM we need to formulate the same for the marginal plane and for both negative and positive hyperplanes as shown in the figure (SVM Diagram);

For the marginal plane, we can write the equation as:

For the positive hyperplane the equation will be:

And for negative hyperplane:

Deriving Marginal Distance

So we have the equation of hyperplanes, by using these equations we can derive the equation for the width or the distance between positive and negative hyperplanes. The equation for marginal distance is derived below:

Now we need to remove w transpose so that we'll get the equation for (x1 - x2), But since w transpose is a vector and it has a direction we need to divide the equation with the norm of w,

Remember we said that the goal of SVM is to maximize the marginal distance between two classes. So here in order to maximize the marginal distance, we can maximize the equation we got subject to some constraints, ie,

Here the condition for maximization is that whenever the value of the equation is greater than or equal to one we need to consider the y value as +1, and whenever it is less than or equal to -1 we need to consider the y value as -1. This equation that needs to be maximized is known as the regularizer.

Soft margin SVM

For performing optimization using gradient descent the regularizer can also be rewritten as follows:

But how did we come up with this equation? Well, it is just by doing the reciprocal of the equation. When considering the reciprocal, the maximization term will be changed to minimization.

There are two more terminologies we need to include in this regularizer for formulating the optimization equation and those are the error terms including the number of errors in the training(C) and the sum of the value of error( Σζ ). So the optimization term will be:

This term allows some classification errors to occur for avoiding overfitting of our model, ie, the hyperplane will not be changed if there are small errors in classification. This model is known as a

Soft Margin SVM

Loss Function: Soft Margin SVM

Now let us consider another approach for deriving the optimization term including the loss function for SVM. In the case of

Linear Regression, we used squared errors as the loss function, but in classification algorithms like SVM, it's not suitable to use squared errors. So what will be the loss function for SVM?

One approach is when the model predicts a value ŷ for a given x and labels y, we can consider the error to be zero when both of them match else one. This approach is known as zero-one loss. But this loss function is used in Combinatorial Optimization problems which is the subfield of mathematical optimization. Using this loss in SVM is really challenging.

Is there any other loss function for SVM? Yes! it's the Hinge Loss function:

If the value of t ≥ 1, f(x), ie, the hinge loss will be zero for correct prediction. If t ≤ 0, the hinge loss returns a value of 1 or larger. By using this hinge loss function it gives an unconstrained optimization term:

The first term is known as the regularization term as we discussed and the second term is the loss term of the error term. Using Gradient Descent we can find the best parameters w and b which we'll discuss in the next article.

If you have any queries don't hesitate to post them in the comment box.

.png)

.png)

.png)